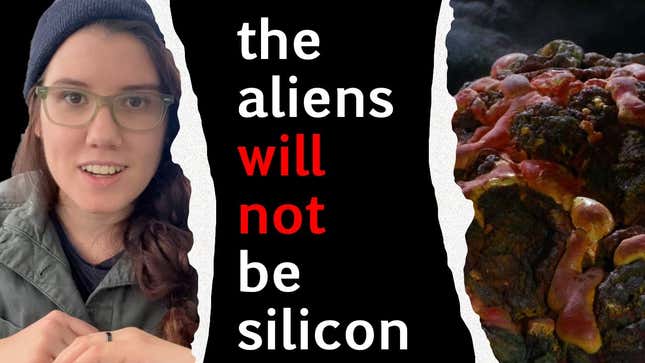

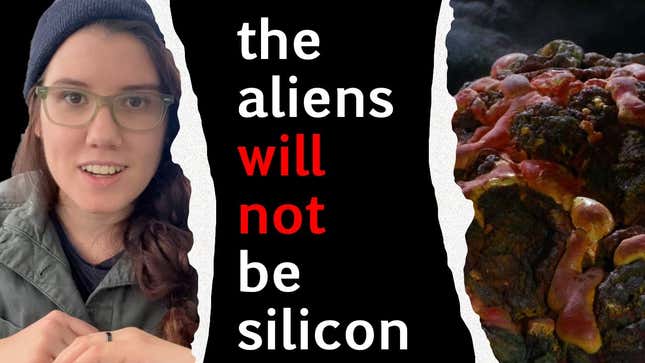

There’s an excellent YouTube video exploring why silicon-based life is likely extremely rare, and why we should expect life (as we know it) to be carbon-based in most circumstances:

This exactly. Most people drive <40 miles a day, and usually not all at once. That means that 39 miles of all-electric range, with any amount of charging during the day, would be more than enough to cover 90% of the driving people do without burning a drop of gas.

No, I actually do think it’s ok to complain about an inherently undemocratic system making decisions for everyone based on the whims of the handful of people that have the most time on their hands to show up to meetings. It’s also why I think mandatory public comment for a lot of local government issues is also…

You know that not all disabilities are visible, and not everyone who uses a wheelchair needs to use a wheelchair at all times, right?

I think the pandemic hit them especially hard on the margins. I don’t have anything but anecdotes to back this up, but IMO they’ve definitely lost some competitive advantages which used to make them my go-to. I still fly them plenty but you’re right, their prices and timeliness have suffered.

I really like Southwest as well and I don’t understand the haters. Unless you’ve got high level status, are rich, or lucky, pretty much every carrier these days is just operating a bus that flies. At least Southwest doesn’t nickel-and-dime you for the privilege, and is relatively humane about their booking policies.

Most of Southwest’s flights are sub-4-hour trips though. They only fly 737s, so transcontinental flights are about as far as they’ll go. Even then, AFAIK Southwest doesn’t run a lot of transcontinental flights.

FWIW the show didn’t do itself any favors. Like, even tickling the idea of purgatory at the end was very obviously playing with fire, given how popular that theory was. That they went with a purgatory-adjacent ending but tried to switch it up stunk of desperation more than subversion, suggesting it was all a hasty…

Honestly nobody should be allowed to work into their 70s. Low impact professions where people can work until they die are facing a real crisis because there are way too many old people at the top who are not making way for young people to move up. Doctors and lawyers working into their 70s and 80s means even well…

Gen Xers and Millennials aren’t that far apart in terms of lifestyle, but there’s an appreciable difference between us in terms of wealth. According to the numbers, Gen Xers were able to start building wealth a lot younger than Millennials, getting a start during the late 90s and early 2000s boom years. Millennials on…

I had the same issue!

I disagree with your “emotionally manipulative tripe” take but agree with everything else you’ve said. The entire time I was playing TLOU2 I kept thinking “Why is this a game and not a movie or a TV show?” There’s nothing about the actual story that is enhanced by being a game; in fact, I’d argue the ending is made…

I’m not sure how this is even a real take. It’s a movie about space wizards, not The Expanse. The Force doesn’t even have much internal story logic in the movies other than “it’s hard to use, you have to train to do so, and it can be physically taxing to do so.” Magic is magical, and Star Wars has always cared more…

Because a lot of people (myself and many bloggers included) think TLJ is a very, very good movie? This isn’t a conspiracy, it’s a difference of opinion.

Seems to me it’s at least as likely that all of these tests are measuring some unknown variable as they are measuring intelligence or aptitude. I remember during LSAT prep being told that LSAT scores correlated strongly with law school grades, and law school grades correlate strongly with eventual success in the…

I agree that going to the doctor is only just now appropriate. 60% of couples successfully conceive within 6 months of trying, so 6 months is a good point to explore if there are medical issues. But I disagree that the episode leaping immediately to IVF and adoption was the right call. I would expect the doctor visit…

Your argument is essentially “Assuming that these facts I’ve simply asserted are correct, and that you’re actually a lying liar, then I’m right and you’re a monster.” How is that an argument?

All I’m asking for is to be able to fit in the seat I paid for without someone actively trying to make that impossible. Others are asking that not only should they be able to fit in their seat, they should also be allowed to recline regardless of others’ comfort. Recliners are being selfish, I’m just asking for a…

All that means is that you’re not a hypocrite. It doesn’t mean you don’t devalue other people’s comfort and overvalue your own.